InteracTable is an interactive musical tabletop designed by Antonella for her BSc project in 2010.

The aim of the project was to explore and evaluate tangible and multi-touch interaction in tabletop interfaces. Users interact by moving tangible objects across the table and by drawing glyphs on its surface. The motions performed on the table are recognized by a Machine Learning (ML) algorithm and then used to drive the sound synthesis. Each interaction also triggers animations on the interface, making the experience delightful and engaging.
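As an illustration of how a tracked object could drive the sound, the following C++ sketch maps one object's marker id, position, and rotation to synthesis parameters. These are the kinds of fields a TUIO object message carries: position normalized to 0..1 and rotation in radians. The mapping itself is an assumption made for this example, not the interacTable's actual design. The `ObjectState`, `SynthParams`, and `mapObjectToSynth` names, the 110 to 880 Hz pitch range, and the filter sweep are all hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical snapshot of one tangible object, mirroring the fields of a
// TUIO object message: marker id, normalized position, and rotation.
struct ObjectState {
    int fiducialId;  // id of the fiducial marker under the object
    float x;         // 0.0 (left edge) .. 1.0 (right edge)
    float y;         // 0.0 (top edge)  .. 1.0 (bottom edge)
    float angle;     // rotation in radians, 0 .. 2*pi
};

enum class Waveform { Sine, Square, Saw };

// Hypothetical parameters for one synthesizer voice.
struct SynthParams {
    Waveform waveform;
    double frequencyHz;
    double amplitude;  // 0.0 .. 1.0
    double cutoffHz;   // low-pass filter cutoff
};

constexpr double kTwoPi = 6.283185307179586;

// Assumed mapping, for illustration only.
SynthParams mapObjectToSynth(const ObjectState& obj) {
    assert(obj.x >= 0.0f && obj.x <= 1.0f && obj.y >= 0.0f && obj.y <= 1.0f);
    SynthParams p{};
    // The marker id selects the timbre, cycling through three waveforms.
    switch (obj.fiducialId % 3) {
        case 0: p.waveform = Waveform::Sine; break;
        case 1: p.waveform = Waveform::Square; break;
        default: p.waveform = Waveform::Saw; break;
    }
    // Horizontal position spans three octaves, 110 Hz to 880 Hz, exponentially.
    p.frequencyHz = 110.0 * std::pow(2.0, 3.0 * obj.x);
    // Objects closer to the top edge play louder.
    p.amplitude = 1.0 - obj.y;
    // One full turn sweeps the cutoff exponentially from 200 Hz toward 8 kHz.
    double turn = std::fmod(static_cast<double>(obj.angle), kTwoPi) / kTwoPi;
    if (turn < 0.0) turn += 1.0;
    p.cutoffHz = 200.0 * std::pow(40.0, turn);
    return p;
}
```

An exponential pitch mapping is a common choice because equal hand movements across the table then produce equal musical intervals rather than equal steps in hertz.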

The interacTable was designed, built, and programmed entirely by Antonella in Processing and C++, using the open-source reacTIVision and TUIO frameworks. Antonella also wrote and trained the ML algorithm that recognizes and classifies users' drawings, which is what enables the multi-touch interaction.
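The source does not say which algorithm the interacTable used to classify drawings. As a stand-in, the sketch below shows one simple, well-known approach: a 1-nearest-neighbour classifier over strokes that are resampled to a fixed number of points and normalized for position and scale, in the spirit of the $1 gesture recognizer. "Training" here simply means storing labelled example strokes. All names (`GlyphClassifier`, `addExample`, `classify`) and the 32-point resolution are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <string>
#include <utility>
#include <vector>

struct Point { double x, y; };
using Stroke = std::vector<Point>;

// Resample a stroke to n points spaced evenly along its path length,
// so strokes drawn at different speeds become comparable.
Stroke resample(Stroke pts, std::size_t n) {
    assert(!pts.empty() && n >= 2);
    double total = 0.0;
    for (std::size_t i = 1; i < pts.size(); ++i)
        total += std::hypot(pts[i].x - pts[i - 1].x, pts[i].y - pts[i - 1].y);
    const double step = total / static_cast<double>(n - 1);
    Stroke out{pts.front()};
    double walked = 0.0;  // distance covered since the last emitted point
    for (std::size_t i = 1; i < pts.size(); ++i) {
        const double d = std::hypot(pts[i].x - pts[i - 1].x, pts[i].y - pts[i - 1].y);
        if (d > 0.0 && walked + d >= step) {
            const double t = (step - walked) / d;
            const Point q{pts[i - 1].x + t * (pts[i].x - pts[i - 1].x),
                          pts[i - 1].y + t * (pts[i].y - pts[i - 1].y)};
            out.push_back(q);
            pts.insert(pts.begin() + static_cast<std::ptrdiff_t>(i), q);  // continue from q
            walked = 0.0;
        } else {
            walked += d;
        }
    }
    out.resize(n, pts.back());  // pad (or trim) leftovers caused by rounding
    return out;
}

// Translate the centroid to the origin and scale the bounding box to unit size.
Stroke normalize(Stroke pts) {
    double cx = 0.0, cy = 0.0;
    double minX = pts[0].x, maxX = pts[0].x, minY = pts[0].y, maxY = pts[0].y;
    for (const Point& p : pts) {
        cx += p.x; cy += p.y;
        minX = std::min(minX, p.x); maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y); maxY = std::max(maxY, p.y);
    }
    cx /= static_cast<double>(pts.size());
    cy /= static_cast<double>(pts.size());
    double size = std::max(maxX - minX, maxY - minY);
    if (size == 0.0) size = 1.0;  // a single dot: avoid dividing by zero
    for (Point& p : pts) { p.x = (p.x - cx) / size; p.y = (p.y - cy) / size; }
    return pts;
}

// Mean distance between corresponding points of two equal-length strokes.
double pathDistance(const Stroke& a, const Stroke& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        sum += std::hypot(a[i].x - b[i].x, a[i].y - b[i].y);
    return sum / static_cast<double>(a.size());
}

// 1-nearest-neighbour classifier: "training" stores labelled, normalized examples.
class GlyphClassifier {
public:
    explicit GlyphClassifier(std::size_t points = 32) : n_(points) {}

    void addExample(const std::string& label, const Stroke& stroke) {
        examples_.push_back({label, prepare(stroke)});
    }

    // Return the label of the stored example closest to the given stroke.
    std::string classify(const Stroke& stroke) const {
        assert(!examples_.empty());
        const Stroke probe = prepare(stroke);
        double best = std::numeric_limits<double>::infinity();
        std::string label;
        for (const auto& ex : examples_) {
            const double d = pathDistance(probe, ex.second);
            if (d < best) { best = d; label = ex.first; }
        }
        return label;
    }

private:
    Stroke prepare(const Stroke& s) const { return normalize(resample(s, n_)); }
    std::size_t n_;
    std::vector<std::pair<std::string, Stroke>> examples_;
};
```

Because strokes are not rotated to a canonical orientation, this sketch is sensitive to rotation and drawing direction; a fuller recognizer would typically add rotation normalization or store examples drawn in several directions.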

Video of a user exploring the interacTable

Pictures and early sketches of the prototype

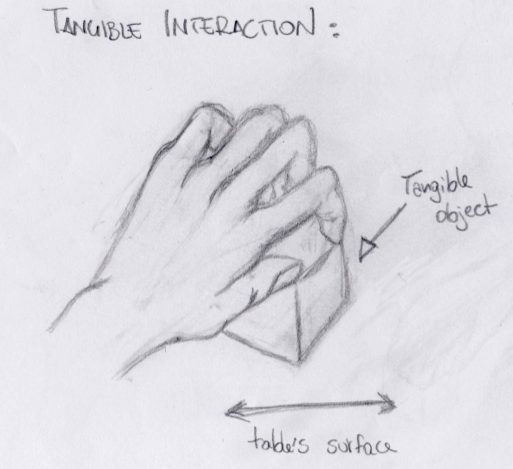

Representation of a tangible interaction on the interacTable

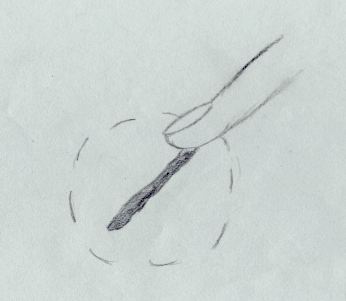

Representation of a multi-touch interaction on the interacTable

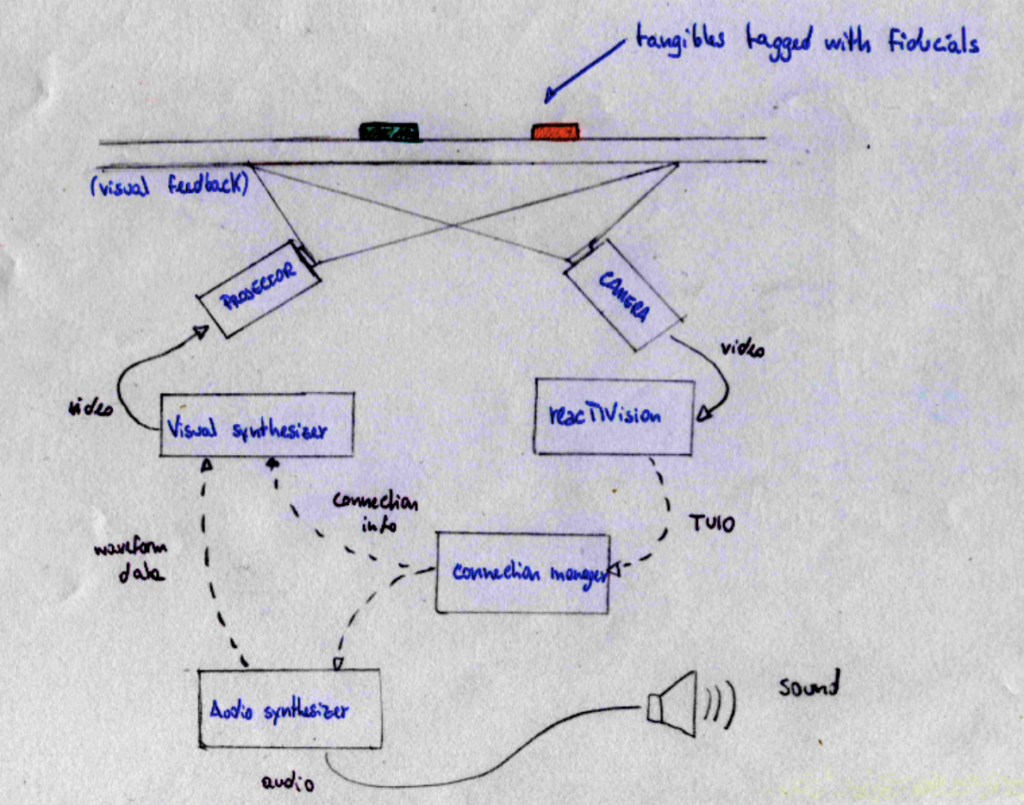

Tabletop architecture

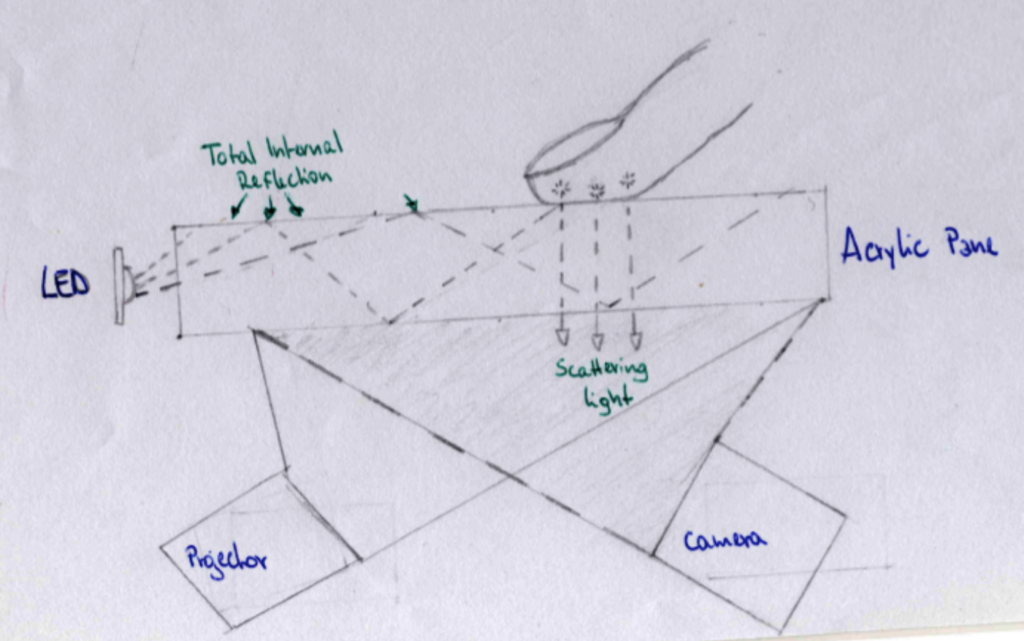

The Frustrated Total Internal Reflection (FTIR) technique used to detect touch interactions
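In an FTIR setup, infrared light injected into the edge of the acrylic surface stays trapped by total internal reflection until a fingertip frustrates it, scattering light toward a camera beneath the surface so that each touch shows up as a bright spot. A minimal sketch of turning such a camera frame into touch points, assuming an 8-bit grayscale image: threshold the pixels, group bright neighbouring pixels into blobs, and report each blob's centroid. The `Frame`, `Touch`, and `detectTouches` names and the threshold and minimum-area parameters are hypothetical; production tracking software usually adds background subtraction and tracks touches across frames.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical grayscale infrared frame, row-major, one byte per pixel.
struct Frame {
    int width, height;
    std::vector<std::uint8_t> pixels;
    std::uint8_t at(int x, int y) const { return pixels[y * width + x]; }
};

// A detected touch: blob centroid in pixel coordinates and blob size.
struct Touch { double x, y; int area; };

// Group pixels at or above `threshold` into 4-connected blobs and return the
// centroid of every blob with at least `minArea` pixels.
std::vector<Touch> detectTouches(const Frame& f, std::uint8_t threshold, int minArea) {
    assert(static_cast<int>(f.pixels.size()) == f.width * f.height);
    std::vector<bool> seen(f.pixels.size(), false);
    std::vector<Touch> touches;
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    for (int y = 0; y < f.height; ++y) {
        for (int x = 0; x < f.width; ++x) {
            if (seen[y * f.width + x] || f.at(x, y) < threshold) continue;
            // Breadth-first flood fill collects one bright blob.
            std::queue<std::pair<int, int>> frontier;
            frontier.push({x, y});
            seen[y * f.width + x] = true;
            long sumX = 0, sumY = 0;
            int area = 0;
            while (!frontier.empty()) {
                const std::pair<int, int> p = frontier.front();
                frontier.pop();
                sumX += p.first; sumY += p.second; ++area;
                for (int k = 0; k < 4; ++k) {
                    const int nx = p.first + dx[k], ny = p.second + dy[k];
                    if (nx < 0 || ny < 0 || nx >= f.width || ny >= f.height) continue;
                    if (seen[ny * f.width + nx] || f.at(nx, ny) < threshold) continue;
                    seen[ny * f.width + nx] = true;
                    frontier.push({nx, ny});
                }
            }
            if (area >= minArea)
                touches.push_back({static_cast<double>(sumX) / area,
                                   static_cast<double>(sumY) / area, area});
        }
    }
    return touches;
}
```

The minimum-area filter discards isolated bright pixels, which are common in infrared camera images, while genuine fingertip contacts cover several pixels.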